近年来,基于仿射变换下的低秩矩阵恢复问题在协同过滤[1]、图像处理[2]、量子成像[3]等多个领域有重要应用,发展求解此问题的高效数值解法是当前数值最优化领域的研究热点之一。仿射秩最小化问题是指下述矩阵最优化问题

| $ \mathop {\min }\limits_X {\mathop{\rm rank}\nolimits} (\mathit{\boldsymbol{X}}),s.t.\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 \le \varepsilon ; $ | (1) |

或者其拉格朗日形式

| $ \mathop {\min }\limits_X \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + \lambda {\kern 1pt} {\kern 1pt} {\kern 1pt} {\mathop{\rm rank}\nolimits} (\mathit{\boldsymbol{X}})。$ | (2) |

式中:X∈Rm×n为要恢复的目标矩阵; b∈Rp为观测数据;

由于矩阵秩函数非凸、非连续,秩最小化问题是一个NP问题[5],因此需要寻找近似函数来替代矩阵的秩函数。核范数是被广泛使用的一种替代函数[1],设d=min(m, n),σi(X)为矩阵X按非增顺序排列的第i个奇异值,则核范数‖X‖*=

Wen等[7-9]提出了一种基于矩阵分解的建模方法,避免了对矩阵进行奇异值分解,但事先需要对矩阵的秩进行合理的预测。Mohan等[10]利用矩阵的Schatten-p范数来代替秩函数,提高了模型的恢复能力。本文用下述新的松弛模型来求解秩最小化问题

| $ \mathop {\min }\limits_X \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + \lambda \log {\kern 1pt} {\kern 1pt} {\kern 1pt} \det \left( {{{\left( {{\mathit{\boldsymbol{X}}^{\rm{T}}}\mathit{\boldsymbol{X}}} \right)}^{\frac{1}{2}}} + \gamma \mathit{\boldsymbol{I}}} \right)。$ | (3) |

其中γ≤1,并且发展了模型(3)的数值解法,证明了数值算法的收敛性,数值实验表明了模型(3)相较于核范数模型的优越性。

1 算法下述引理用于得到模型(3)的等价形式。

引理 1 设X∈Rm×n,γ≤1,则

| $ \log {\kern 1pt} {\kern 1pt} {\kern 1pt} \det \left( {{{\left( {{\mathit{\boldsymbol{X}}^{\rm{T}}}\mathit{\boldsymbol{X}}} \right)}^{\frac{1}{2}}} + \gamma \mathit{\boldsymbol{I}}} \right) = \sum\limits_{i = 1}^d {\log } \left( {{\sigma _i}(\mathit{\boldsymbol{X}}) + \gamma } \right)。$ | (4) |

证明 由矩阵行列式的性质可知

| $ \begin{array}{*{20}{c}} {\log {\kern 1pt} {\kern 1pt} {\kern 1pt} \det \left( {{{\left( {{\mathit{\boldsymbol{X}}^{\rm{T}}}\mathit{\boldsymbol{X}}} \right)}^{\frac{1}{2}}} + \gamma \mathit{\boldsymbol{I}}} \right) = \log \left( {\prod\limits_{i = 1}^d {{\sigma _i}} (\mathit{\boldsymbol{X}}) + \gamma } \right) = }\\ {\sum\limits_{i = 1}^d {\log } \left( {{\sigma _i}(\mathit{\boldsymbol{X}}) + \gamma } \right)}。\end{array} $ |

由引理1,可得到模型(3)的如下等价形式

| $ \mathop {\min }\limits_X \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + \sum\limits_{i = 1}^d {\log } \left( {{\sigma _i}(\mathit{\boldsymbol{X}}) + \gamma } \right)。$ | (5) |

定义 1 对于下半连续的正常函数g(x):R→R及α>0,其邻近算子Proxαg:R→R定义如下:

| $ {\mathop{\rm Pro}\nolimits} {x_{\alpha g}}(y) = \arg \mathop {\min }\limits_{x \in {\bf{R}}} \left\{ {f(x) = \alpha g(x) + \frac{1}{2}{{(x - y)}^2}} \right\}。$ | (6) |

在本文中,我们讨论y为矩阵奇异值的情况,因此只需要对y≤0进行考虑。下面的三个定理给出了模型(5)中罚函数的邻近算子的相关性质及其显式表达式。

定理 1 设g(x)=log(x+γ),γ≤1,α>0,g(x)的邻近算子满足如下性质:

① ∃c>0,∀y∈[0, c],Proxαg(y)=0。

② 当y→+∞时,对∀z∈Proxαg(y),有z→+∞且

③ 当y1 < y2时,对∀z1∈Proxαg(y1),∀z2∈Proxαg(y2),有z1 < z2。

证明 ①令h(x)=

② 当y→+∞时,对∀z∈Proxαg(y),z必为f(x)的一个驻点,即f′(z)=

| $ \mathop {\lim }\limits_{y \to + \infty } (z - y) = \mathop {\lim }\limits_{y \to + \infty } \frac{{ - \alpha }}{{z + \gamma }} = 0。$ |

③ 当y1 < y2时,对∀z1∈Proxαg(y1),∀z2∈Proxαg(y2),由邻近算子的定义,则

| $ \begin{array}{*{20}{l}} {\alpha g\left( {{z_1}} \right) + \frac{1}{2}{{\left( {{z_1} - {y_1}} \right)}^2} \le \alpha g\left( {{z_2}} \right) + \frac{1}{2}{{\left( {{z_2} - {y_1}} \right)}^2} \le }\\ {\alpha g\left( {{z_2}} \right) + \frac{1}{2}{{\left( {{z_2} - {y_2}} \right)}^2} + }\\ {\frac{1}{2}{{\left( {{y_2} - {y_1}} \right)}^2} + \left( {{z_2} - {y_2}} \right)\left( {{y_2} - {y_1}} \right) \le }\\ {\alpha g\left( {{z_1}} \right) + \frac{1}{2}{{\left( {{z_1} - {y_2}} \right)}^2} + \frac{1}{2}{{\left( {{y_2} - {y_1}} \right)}^2} + }\\ {\left( {{z_2} - {y_2}} \right)\left( {{y_2} - {y_1}} \right)} \end{array} $ |

对上述不等式化简可得z1 < z2。

定理 2 设g(x)=log(x+γ),γ≤1,α>0,当

| $ {\mathop{\rm Pro}\nolimits} {x_{\alpha g}}(y) = \left\{ {\begin{array}{*{20}{l}} {0,y \le \frac{\alpha }{\gamma }}\\ {\hat x,y > \frac{\alpha }{\gamma }} \end{array}} \right.。$ | (7) |

式中

证明 当

定理 3 设g(x)=log(x+γ),γ≤1,α>0,当

| $ {\mathop{\rm Pro}\nolimits} {x_{ag}}(y) = \left\{ {\begin{array}{*{20}{c}} {0,}&{y < {y^*}}\\ {\{ 0,\hat x\} ,}&{y = {y^*}}\\ {\hat x,}&{y > {y^*}} \end{array}} \right.。$ | (8) |

式中

证明 可求

| $ \begin{array}{*{20}{c}} {f(0) = \frac{1}{2}{y^2} + \alpha \log \gamma ;}\\ {f(\hat x) = \frac{1}{2}{{\left( {\frac{{\sqrt {{{(y + \gamma )}^2} - 4\alpha } - (y + \gamma )}}{2}} \right)}^2} + }\\ {\alpha \log \frac{{\sqrt {{{(y + \gamma )}^2} - 4\alpha } + (y + \gamma )}}{2}}。\end{array} $ |

f(0)和f(

假设存在点

综上所述,当y < y*时,Proxαg(y)=0。当y=y*时, Proxαg(y)={0,

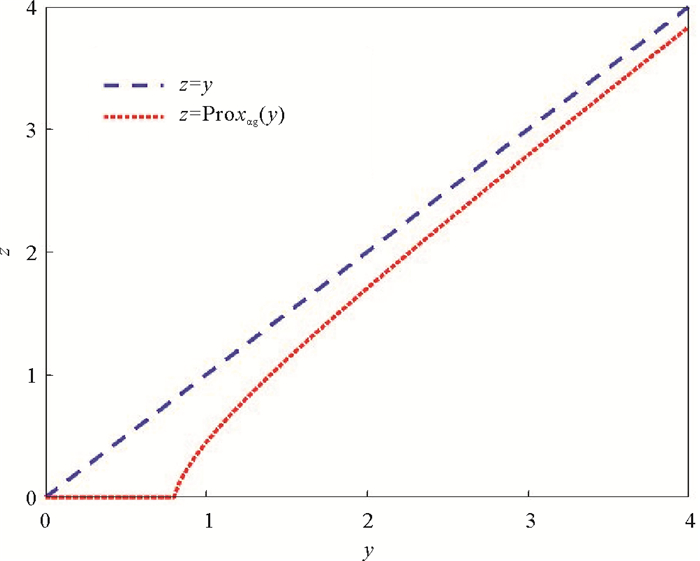

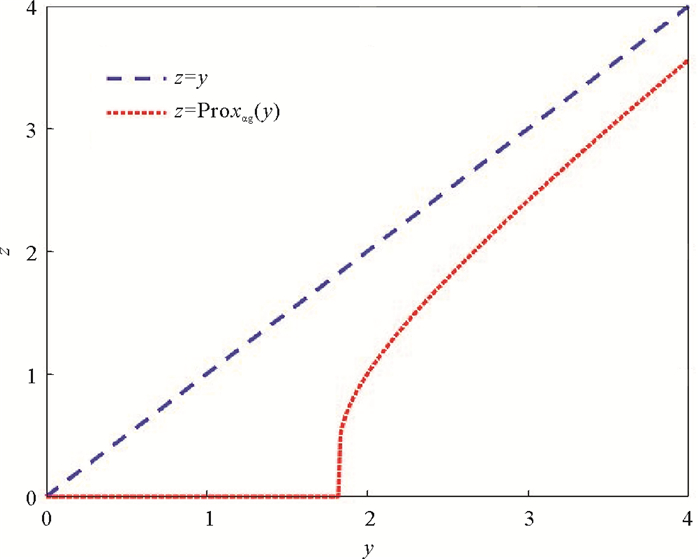

图 1和2分别画出了γ=1,α=0.8(

|

图 1 log(x+γ)的邻近算子在γ=1,α=0.8时的图像 Fig. 1 Curve of proximal operator for log(x+γ) at γ=1, α=0.8 |

|

图 2 log(x+γ)的邻近算子在γ=1,α=2时的图像 Fig. 2 Curve of proximal operator for log(x+γ) at γ=1, α=2 |

下面将g(x)的邻近算子推广到矩阵情形。设α>0,G(X)=

| $ {\mathop{\rm Pro}\nolimits} {x_{\alpha G}}(\mathit{\boldsymbol{X}}) = \arg \mathop {\min }\limits_Y \frac{1}{2}\left\| {\mathit{\boldsymbol{Y}} - \mathit{\boldsymbol{X}}} \right\|_F^2 + \alpha G(\mathit{\boldsymbol{Y}})。$ |

设UDiag(σ)VT为X的奇异值分解,由邻近算法[11]中的结论可知有ProxαG(X)的一个显式解为

| $ {\mathop{\rm Pro}\nolimits} {x_{\alpha G}}(\mathit{\boldsymbol{X}}) = \mathit{\boldsymbol{U}}{\rm{Diag}}\left( {{\mathop{\rm Pro}\nolimits} {x_{\alpha g}}\left( {{\sigma _1}} \right), \cdots ,{\mathop{\rm Pro}\nolimits} {x_{\alpha g}}\left( {{\sigma _d}} \right)} \right){\mathit{\boldsymbol{V}}^{\rm{T}}}。$ | (9) |

我们接下来讨论模型(5)的数值解法,假设为第k步迭代中的解,在第k+1步迭代中,将

| $ \begin{array}{*{20}{l}} {{\mathit{\boldsymbol{X}}^{k + 1}} \in \arg \mathop {\min }\limits_\mathit{\boldsymbol{X}} \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + < {\mathscr{A}^*}\left( {\mathscr{A}{\mathit{\boldsymbol{X}}^k} - b} \right),}\\ {\mathit{\boldsymbol{X}} - {\mathit{\boldsymbol{X}}^k} > + \frac{\mu }{2}\left\| {\mathit{\boldsymbol{X}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2 + \sum\limits_{i = 1}^d \lambda {\kern 1pt} {\kern 1pt} {\kern 1pt} \log \left( {{\sigma _i}(\mathit{\boldsymbol{X}}) + \gamma } \right) = }\\ {\arg \mathop {\min }\limits_X \frac{\mu }{2}\left\| {\mathit{\boldsymbol{X}} - {\mathit{\boldsymbol{X}}^k} + \frac{1}{\mu }{\mathscr{A}^*}\left( {A{\mathit{\boldsymbol{X}}^k} - b} \right)} \right\|_F^2 + }\\ {\sum\limits_{i = 1}^d \lambda \log \left( {{\sigma _i}(\mathit{\boldsymbol{X}}) + \gamma }。\right)} \end{array} $ | (10) |

式中

由(9)式我们得到迭代算法

| $ {\mathit{\boldsymbol{X}}^{k + 1}} \in {\mathop{\rm Pro}\nolimits} {x_{\frac{\lambda }{\mu }G}}\left( {{\mathit{\boldsymbol{X}}^k} - \frac{1}{\mu }{\mathscr{A}^*}\left( {\mathscr{A}{\mathit{\boldsymbol{X}}^k} - b} \right)} \right)。$ | (11) |

记上述算法为PGA算法,Zk=Xk-

令

| $ F\left( \boldsymbol{X} \right) = \frac{1}{2}\left\| {{\mathscr{A}}\boldsymbol{X} - b} \right\|_2^2 + \sum\limits_{i = 1}^d {\lambda \;\log \left( {{\sigma _i}\left( \boldsymbol{X} \right) + \gamma } \right)} $ |

下面的定理给出了上述算法的收敛性。

定理 4 设μ>λmax(

①{F(Xk)}单调递减并收敛。

②

证明 ①由(10)式可得

| $ \begin{array}{*{20}{l}} {<{\mathscr{A}^*}\left( {A{\mathit{\boldsymbol{X}}^k} - b} \right),{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k} > + \frac{1}{2}\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2 + }\\ {\sum\limits_{i = 1}^d \lambda \log \left( {{\sigma _i}\left( {{\mathit{\boldsymbol{X}}^{k + 1}}} \right) + \gamma } \right) \le \sum\limits_{i = 1}^d \lambda \log \left( {{\sigma _i}\left( {{\mathit{\boldsymbol{X}}^k}} \right) + \gamma } \right)}。\end{array} $ | (12) |

将

| $ \begin{array}{*{20}{c}} {\frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 \le \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + < {\mathscr{A}^*}\left( {\mathscr{A}{\mathit{\boldsymbol{X}}^k} - b} \right),}\\ {\mathit{\boldsymbol{X}} - {\mathit{\boldsymbol{X}}^k} > + \frac{{{\lambda _{\max }}\left( {{\mathscr{A}^*}\mathscr{A}} \right)}}{2}\left\| {\mathit{\boldsymbol{X}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2} \end{array} $ |

将Xk+1带入上式,则

| $ \begin{array}{l} \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|\frac{2}{2} + \frac{{\mu - {\lambda _{\max }}\left( {{\mathscr{A}^*}\mathscr{A}} \right)}}{2}\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2 \le \\ \frac{1}{2}\left\| {\mathscr{A}\mathit{\boldsymbol{X}} - b} \right\|_2^2 + < {\mathscr{A}^*}\left( {\mathscr{A}{\mathit{\boldsymbol{X}}^k} - b} \right),{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k} > + \\ {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} \frac{\mu }{2}\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2。\end{array} $ | (13) |

由式(12)和(13)可得

| $ F\left( {{\mathit{\boldsymbol{X}}^{k + 1}}} \right) + \frac{{\mu - {\lambda _{\max }}\left( {{\mathscr{A}^*}\mathscr{A}} \right)}}{2}\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2 \le F\left( {{\mathit{\boldsymbol{X}}^k}} \right)。$ |

则

| $ F\left( {{\mathit{\boldsymbol{X}}^k}} \right) - F\left( {{\mathit{\boldsymbol{X}}^{k + 1}}} \right) \le \frac{{\mu - {\lambda _{\max }}\left( {{\mathscr{A}^*}\mathscr{A}} \right)}}{2}\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2。$ |

因为μ>λmax(

② 设

| $ F\left( {{\mathit{\boldsymbol{X}}^1}} \right) - C \le \sum\limits_{i = 1}^\infty {\frac{{\mu - {\lambda _{\max }}\left( {{\mathscr{A}^*}\mathscr{A}} \right)}}{2}} \left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|_F^2, $ |

由于μ>λmax(

在本节中,我们通过矩阵填充问题来验证模型(3)的有效性和算法的可行性。算法中涉及到的奇异值分解利用PROPACK[12]计算。

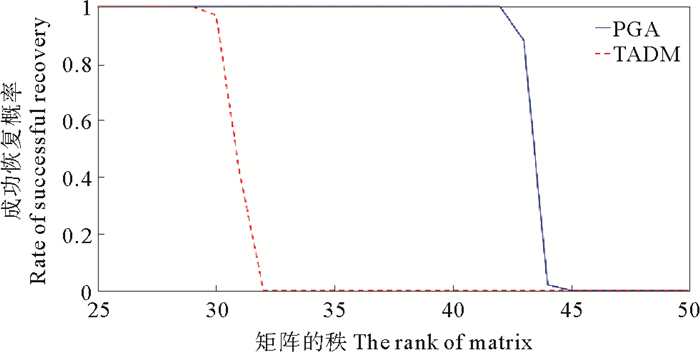

在无噪声的情况下,我们模拟模型和算法的恢复能力。假定采样率为50%,X=X1X2为目标矩阵,其中X1∈R200×r,X2∈Rr×200为元素服从均值为0,方差为1的正态分布的随机矩阵,整数r为矩阵的秩,其变化范围为25~50。设X*为算法得到的解,当相对误差满足

| $ \frac{{{{\left\| {{\mathit{\boldsymbol{X}}^*} - \mathit{\boldsymbol{X}}} \right\|}_F}}}{{{{\left\| \mathit{\boldsymbol{X}} \right\|}_F}}} \le {10^{ - 3}}, $ |

则视为恢复成功。对每个r重复100次实验,设恢复成功的次数为Sr,则定义恢复概率为Sr/100。我们将PGA算法与采用核范数的IADM[13]算法进行比较。由于侧重比较模型的恢复能力,我们希望算法能够得到充分迭代,因此设置停机准则为

| $ \frac{{{{\left\| {{\mathit{\boldsymbol{X}}^{k + 1}} - {\mathit{\boldsymbol{X}}^k}} \right\|}_F}}}{{{{\left\| {{\mathit{\boldsymbol{X}}^k}} \right\|}_F}}} \le {10^{ - 6}}。$ |

由图 3可以看出,当r≤29时,PGA和IADM算法都能够恢复成功;当r>32时,IADM算法恢复失败;而当r≤42时,PGA算法仍可恢复成功。

|

图 3 无噪声情形下的矩阵填充结果 Fig. 3 Result of matrix completion without noise |

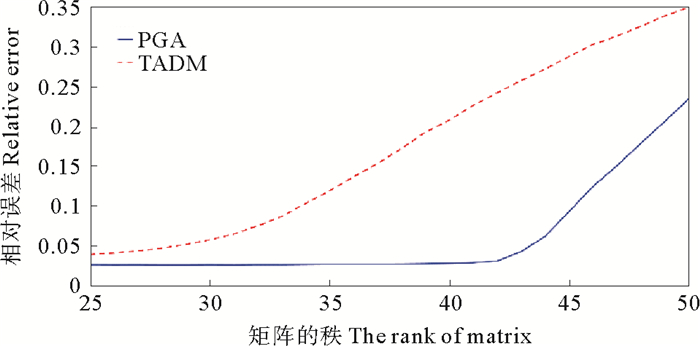

PGA算法亦适用于含噪声情况。假定采样率和目标矩阵X的构造与上述实验相同,令Xnoise=X+0.1×E,其中E为元素服从均值为0,方差为1的正态分布的随机矩阵。然后对Xnoise进行采样,矩阵的秩r变化范围为25~50。我们对每个r做20次实验得到其平均误差,并同IADM算法进行了对比。由图 4可以看出当r≤30时,IADM算法相对误差高于0.05,且相对误差逐渐增大,而PGA算法在r≤43时,相对误差仍在0.05以下。

|

图 4 有噪声情形下的矩阵填充结果 Fig. 4 Result of matrix completion with noise |

本文提出了一种矩阵秩最小化问题的松弛模型,并给出了邻近梯度下降算法,证明了算法的收敛性。通过实验表明,模型的恢复能力要高于核范数松弛,是求解原问题的一种好的模型,且在有噪声污染的情况下,算法的表现也优于核范数松弛,本研究为解决实际问题提供了一种新的途径。

| [1] |

Candès E J, Recht B. Exact matrix completion via convex optimization[J]. Foundations of Computational Mathematics, 2009, 9(6): 717-772. DOI:10.1007/s10208-009-9045-5

(  0) 0) |

| [2] |

Zhou X W, Yang C, Zhao H Y, et al. Low-rank modeling and its applications in image analysis[J]. ACM Computing Surveys, 2014, 47(2): 1-36.

(  0) 0) |

| [3] |

Gross D, Liu Y K, Flammia S T, et al. Quantum state tomography via compressed sensing[J]. Physiscal Review Letters, 2010, 105: 150401. DOI:10.1103/PhysRevLett.105.150401

(  0) 0) |

| [4] |

Candès E J, Tao T. The power of convex relaxation: Near-optimal matrix completion[J]. IEEE Transactions on Information Theory, 2010, 56(5): 2053-2080. DOI:10.1109/TIT.2010.2044061

(  0) 0) |

| [5] |

Recht B, Fazel M, Parrilo P. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization[J]. SIAM Review, 2010, 52(3): 471-501. DOI:10.1137/070697835

(  0) 0) |

| [6] |

Candès E J, Plan Y. Matrix completion with noise[J]. Proceedings of the IEEE, 2010, 98(6): 925-936. DOI:10.1109/JPROC.2009.2035722

(  0) 0) |

| [7] |

Wen Z W, Yin W T, Zhang Y. Solving a low-rank factorization m-odel for matrix completion by a nonlinear successive over-relaxation algorithm[J]. Mathematical Programming Computation, 2012, 4(4): 333-361. DOI:10.1007/s12532-012-0044-1

(  0) 0) |

| [8] |

Tu S, Boczar R, Simchowitz M, et al. Low-rank Solutions of Linear Matrix Equations Via Procrustes Flow[C]. New York, USA: Proceedings of the 33rd International Conference on International Conference on Machine Learning, 2016: 964-973.

(  0) 0) |

| [9] |

Sun R, Luo Z. Guaranteed matrix completion via non-convex fact-orization[J]. IEEE Transactions on Information Theory, 2016, 62(11): 6535-6579. DOI:10.1109/TIT.2016.2598574

(  0) 0) |

| [10] |

Mohan K, Fazel M. Iterative reweighted algorithms for matrix rank minimization[J]. Journal of Machine Learning Research, 2012, 13(1): 3441-3473.

(  0) 0) |

| [11] |

Parikh N, Boyd S. Proximal algorithms[J]. Foundations and Trends in Optimization, 2014, 1(3): 127-239. DOI:10.1561/2400000003

(  0) 0) |

| [12] |

Larsen R M. PROPACK-software for large and sparse svd calcula-tions[EB/OL]. (2011-04-01)[2019-01-16]. http://sun.stanford.edu/rmunk/PROPACK/.

(  0) 0) |

| [13] |

Yang J F, Yuan X M. Linearized augmented lagrangian and alternating direction methods for nuclear norm minimization[J]. Mathematics of Computation, 2013, 82(281): 301-329.

(  0) 0) |

2021, Vol. 51

2021, Vol. 51